Unleashing the Power of YOLO-NAS: A New Era in Object Detection and Computer Vision

The Future of Computer Vision is Here

What does it take to make a mark in the fiercely competitive world of object detection?

In this newsletter edition, I want to take you on a behind-the-scenes journey of how YOLO-NAS, a novel, groundbreaking object detection architecture that sets a new standard for State-of-the-Art, came into being.

Igniting Ambition

Our researchers started experimenting with applying the AutoNAC engine to the object detection task in March 2023.

Object detection is a highly competitive computer vision subfield. Many teams worldwide compete in this area, striving for state-of-the-art (SOTA) results.

The team had a singular goal: to develop an object detection model that would distinguish itself in this crowded space. Like sprinters shaving milliseconds off their times, the team understood that even incremental improvements could be groundbreaking. They embraced the challenge, knowing that each small gain in mAP and each reduction in latency could shift the landscape of object detection models.

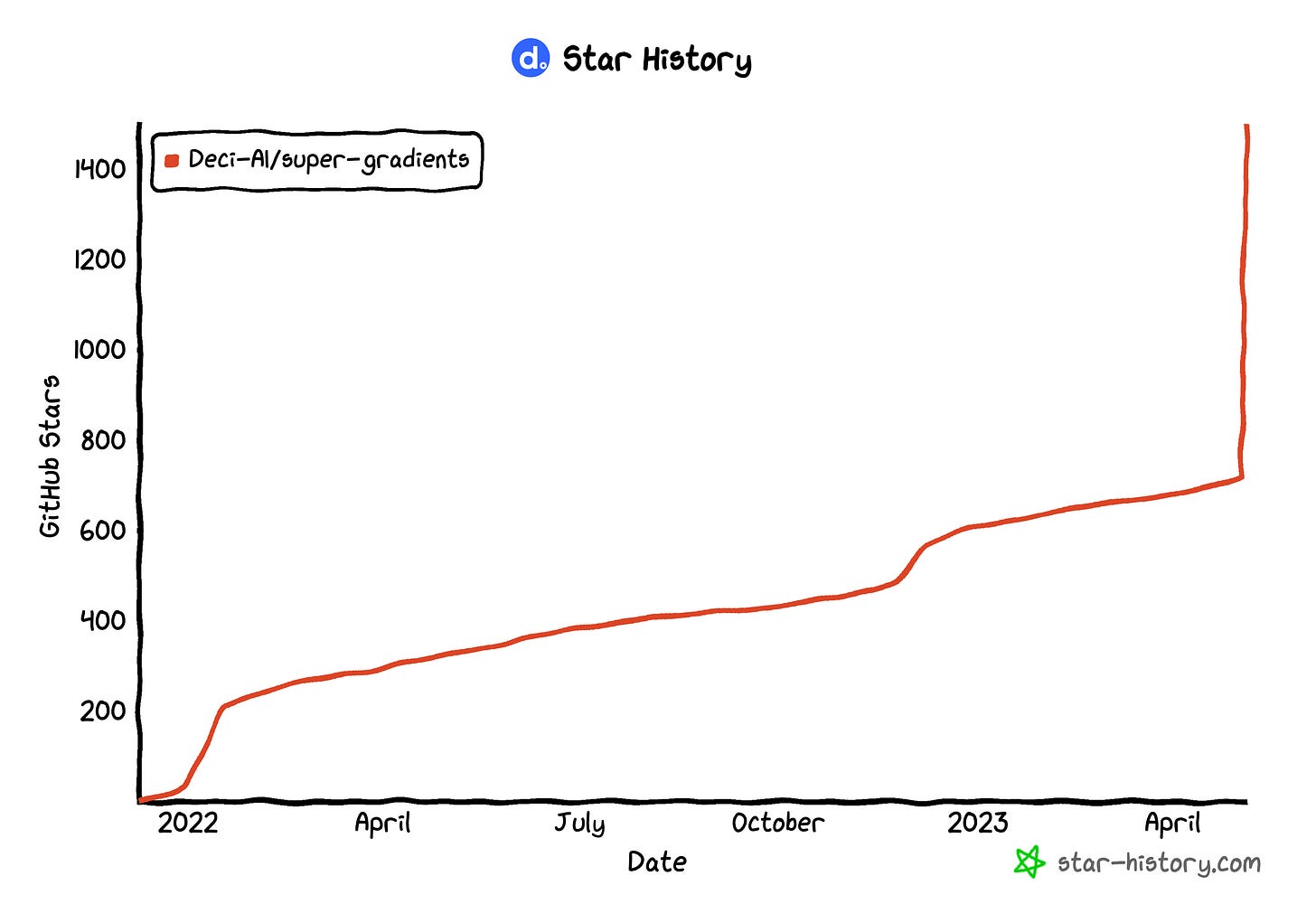

As a team, we also had a secondary aim: demonstrating the capabilities of Deci's AutoNAC engine and bringing exposure to SuperGradients, our powerful, intuitive, and unparalleled training library.

And if these graphs indicate anything, we’ve done a good job of what we set out to do.

Designing the Blueprint

Inspired by the success of modern YOLO architectures, our team set out to create a new quantization-friendly architecture - and it all started with Neural Architecture Search (NAS).

The first thing you need to do when performing Neural Architecture Search is define the architecture search space.

For YOLO-NAS, our researchers took inspiration from the basic blocks of YOLOv6 and YOLOv8. With the architecture and training regime in place, our researchers harnessed the power of AutoNAC. It intelligently searched a vast space of ~10^14 possible architectures, ultimately zeroing in on three final networks that promised outstanding results.
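To make the idea of a search space concrete, here is a minimal sketch in Python. The stage names, block types, depths, and widths are hypothetical and chosen only for illustration; they are not the actual YOLO-NAS search space, and random sampling stands in for whatever search strategy AutoNAC uses. The point is simply that the number of candidate architectures is the product of the options available at each design decision, so it grows very quickly.

```python
import random
from math import prod


def build_search_space(num_stages):
    """Build a toy search space: three design decisions per stage.

    All option names and values here are hypothetical placeholders.
    """
    space = {}
    for stage in range(1, num_stages + 1):
        space[f"stage{stage}_block"] = ["conv", "residual", "bottleneck"]
        space[f"stage{stage}_depth"] = [1, 2, 3, 4]
        space[f"stage{stage}_width"] = [32, 64, 128, 256]
    return space


def count_architectures(space):
    """Size of the space: the product of the option counts per decision."""
    return prod(len(options) for options in space.values())


def sample_architecture(space, rng):
    """Pick one option per decision, i.e. one point in the search space."""
    return {name: rng.choice(options) for name, options in space.items()}


space = build_search_space(num_stages=4)
print("decisions:", len(space))
print("candidate architectures:", count_architectures(space))
print("one random candidate:", sample_architecture(space, random.Random(0)))
```

Even this toy space, with 48 combinations per stage, reaches millions of candidates after four stages, which is why evaluating every candidate by full training is impractical and a guided search is needed.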

The result is a family of architectures with a novel quantization-friendly basic block: the small, medium, and large versions of YOLO-NAS.

With the foundation of YOLO-NAS firmly established, the team was ready to embark on the next critical phase: perfecting the training regime to ensure the full potential of these innovative architectures could be realized.

Interested in the technical details of YOLO-NAS? Read the technical blog post.

Perfecting the Training Regime

The team devised an advanced and innovative training scheme incorporating several elements, each carefully crafted to extract maximum performance from the model:

• Pretraining on the extensive Objects365 dataset.

• Employing pseudo-labelled data to enhance learning.

• Applying knowledge distillation from a pre-trained teacher model to improve performance.
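Of the ingredients above, knowledge distillation is the easiest to show in a few lines. The sketch below is a generic, classification-style distillation loss: the KL divergence between temperature-softened teacher and student distributions, scaled by the squared temperature. It is an assumption for illustration only; this post does not describe the exact distillation loss used for YOLO-NAS, and the function names and the temperature value are hypothetical.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Numerically stable log-softmax of temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in scaled))
    return [z - log_sum_exp for z in scaled]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    log_p_teacher = log_softmax(teacher_logits, temperature)
    log_p_student = log_softmax(student_logits, temperature)
    kl = sum(
        math.exp(lt) * (lt - ls)
        for lt, ls in zip(log_p_teacher, log_p_student)
    )
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]   # hypothetical teacher class scores
student = [2.5, 1.5, 0.2]   # hypothetical student class scores
print("distillation loss:", distillation_loss(student, teacher))
print("loss when student matches teacher:", distillation_loss(teacher, teacher))
```

The loss is zero when the student reproduces the teacher's distribution and positive otherwise, so minimizing it pulls the student's predictions toward the teacher's. In practice it is usually combined with the ordinary supervised loss on labelled data.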

After completing the training process, post-training quantization (PTQ) was applied, converting the network into INT8.
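For readers new to quantization, here is a minimal sketch of what converting values to INT8 means. It uses symmetric, per-tensor quantization with a scale derived from the largest absolute value, which is one common scheme. Whether YOLO-NAS uses this exact scheme is not stated here, so treat the helper names and the choice of scheme as assumptions.

```python
def compute_scale(values, num_bits=8):
    """Scale that maps the largest absolute value onto the top integer level."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for INT8
    max_abs = max(abs(v) for v in values)
    return max_abs / qmax if max_abs > 0 else 1.0


def quantize(values, scale, num_bits=8):
    """Round each value to the nearest integer level and clamp to the INT8 range."""
    qmax = 2 ** (num_bits - 1) - 1
    qmin = -qmax - 1
    return [max(qmin, min(qmax, round(v / scale))) for v in values]


def dequantize(q_values, scale):
    """Map integer levels back to approximate floating-point values."""
    return [q * scale for q in q_values]


weights = [0.5, -1.27, 0.003, 1.0]  # hypothetical float weights
scale = compute_scale(weights)
q_weights = quantize(weights, scale)
restored = dequantize(q_weights, scale)

print("scale:", scale)
print("int8 weights:", q_weights)
print("max round-trip error:", max(abs(w - r) for w, r in zip(weights, restored)))
```

Each value in range is recovered to within half a quantization step (`scale / 2`). Small values such as `0.003` collapse to zero, which illustrates why some architectures lose accuracy under quantization and why a quantization-friendly design matters.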

Testing the Model's Versatility

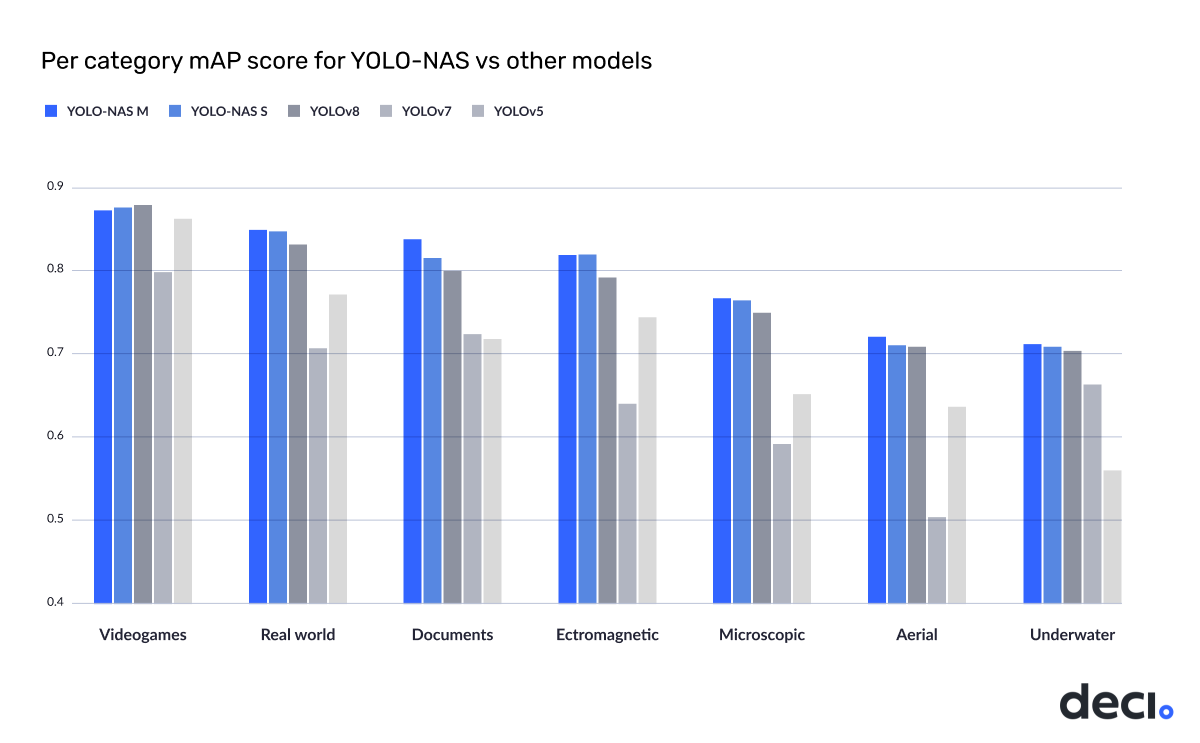

To demonstrate the prowess of YOLO-NAS, we tested it on Roboflow 100, a benchmark collection of 100 diverse datasets spanning domains from video games to aerial images.

Our model outperformed close competitors like YOLOv7 and YOLOv8, proving its potential as a versatile and powerful foundation for transfer learning.

Overcoming Quantization Challenges

Quantization-aware training (QAT) can enhance a model's efficiency without sacrificing accuracy, but it's far from a walk in the park.

It requires quantization-friendly model components, intricate infrastructure, and precise adjustments to settings and hyperparameters. Thankfully, our model was designed with QAT in mind. With SuperGradients' user-friendly interface and expert guidance, we not only overcame these challenges but also improved our model's efficiency without sacrificing accuracy.

Tired of all the talk? Want to see YOLO-NAS in action? Check out this getting started notebook I’ve put together for you.

The Launchpad

I’m proud of the team’s achievements and the potential of YOLO-NAS, but our journey doesn't end here.

As we continue to explore the boundaries of object detection (and deep learning in general), we remain committed to pushing the limits and sharing our progress with you. Our model may not remain unbeatable forever, but our unwavering determination to stay ahead of the curve will keep us on the cutting edge of this dynamic field.

We’re not resting on our laurels.

We rely on your support to enhance the object detection game.

To achieve this, I urge you to subject YOLO-NAS and SuperGradients to stress tests, push them to their limits, and attempt to break them. Should you encounter any issues or have questions, all you have to do is open an issue on GitHub. SuperGradients is an open-source library, and if you're interested in contributing, please review our contribution guidelines.

I hope this behind-the-scenes look at our efforts has piqued your interest and inspired you to follow our journey.

Stay tuned for more exciting updates, and don't hesitate to reach out with any questions or feedback.

The Team Behind YOLO-NAS

The success of YOLO-NAS can be attributed to the hard work, dedication, and brilliance of the following individuals:

Research: Amos Gropp, Ido Shahaf, Ran El-Yaniv, Akhiad Bercovich

Engineering: Ofri Masad, Shay Aharon, Eugene Khvedchenia, Louis Dupont, Kate Yurkova

Product: Shani Perl

On behalf of the community, I thank you all for your hard work and for making YOLO-NAS a reality 👏🏽 🙏🏽!

Community Generated Content

It's impressive how quickly the community has embraced YOLO-NAS and started producing content with it! I want to thank everyone who has shared their exceptional work so far. Here are a few examples I know of; if you know of any others, please share them.

• DagsHub showing our integration into their platform

• Nate Haddad integrating YOLO-NAS into Fast-Track. Check out his project on GitHub and don’t forget to give it a star ⭐️!

• Voxel51 on how to load predictions into their platform

• Nicolai Nielsen’s intro to YOLO-NAS video

• Chris Alexiuk with a Star Wars Day project using YOLO-NAS

• Code with Aarohi’s intro to YOLO-NAS video

• Muhammad Moin’s intro to YOLO-NAS video

• Ritesh Kanjee from Augmented Startups with the blog post Rise of YOLO-NAS: Discover the 8 Features That Propel It to Object Detection Stardom!

• My starter notebooks on Kaggle

I’ll see you next week, cheers!

Harpreet